Is Inputting Client Data into AI a Data Breach?

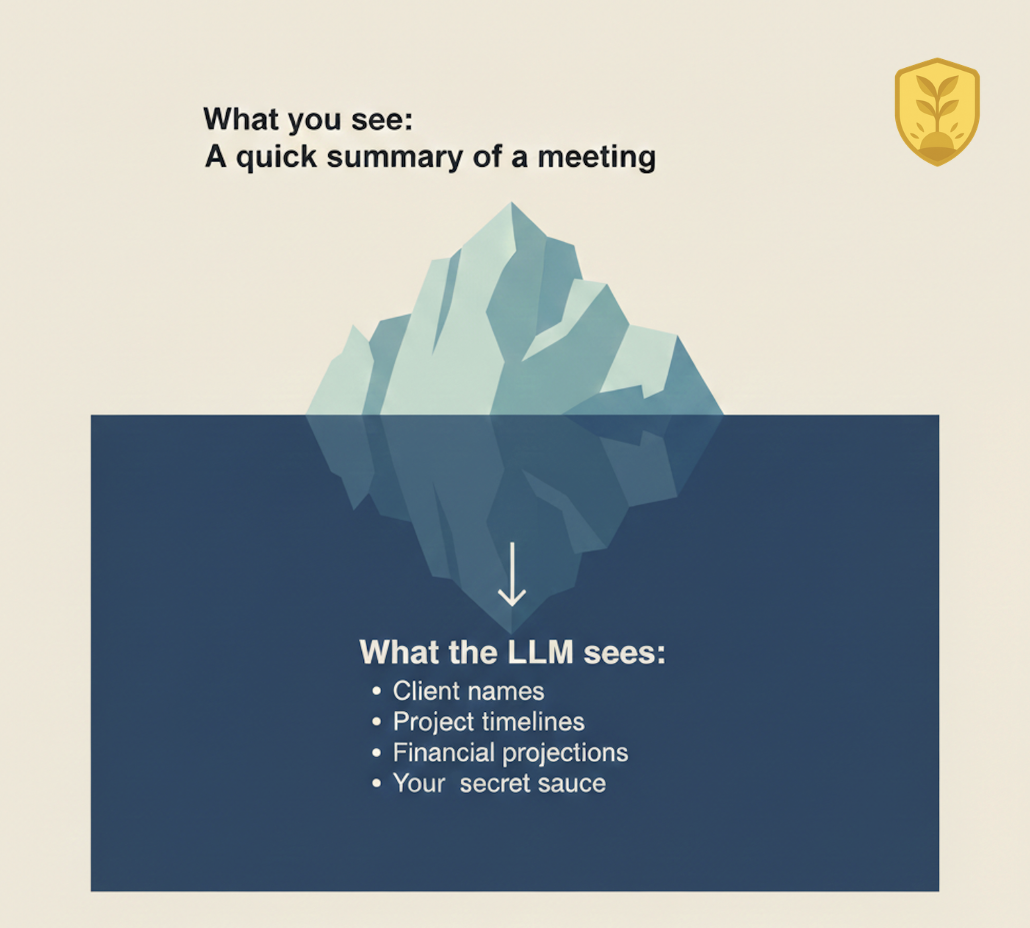

The speed of AI tools like ChatGPT and Gemini is addictive. It is incredibly tempting to summarize a messy transcript or draft a client report in seconds. However, for professionals handling sensitive information, that convenience comes with a hidden question: Are you inadvertently causing a data breach every time you click "send"?

In many cases, the answer is a quiet but firm yes.

Redefining the Breach

When we think of a "data breach," we usually imagine hackers in hoodies. But in the age of Large Language Models (LLMs), a breach is often just an unintended disclosure. When you paste client information into a public AI tool, you are handing it over to a third-party provider. Unless you have an enterprise agreement that says otherwise, that data is no longer fully under your control. Depending on the provider's terms, it can be:

Absorbed into the model: The provider may use your input to train future versions of the model, so fragments of your client's details could later surface in a response to a stranger's query.

Stored externally: Your client's data lives on servers you don't manage, possibly in another jurisdiction, and usually without the client's knowledge.

Reviewed by humans: Some AI providers have human reviewers, often contractors, read samples of conversations for quality control.

Real-Life Case: The Samsung Source Code Leak

In 2023, engineers at Samsung accidentally leaked sensitive corporate secrets through ChatGPT. In three separate incidents, employees pasted top-secret source code and internal meeting notes into the AI to help them fix bugs and create summaries.

Because they were using the standard consumer version of the tool, that data left Samsung's control: it was stored on OpenAI's servers and, under the terms in force at the time, could be used to train future models. Samsung responded by restricting employee use of generative AI tools across the company, to keep its "secret sauce" from potentially being served up to competitors asking the AI for coding advice. This wasn't a hack; it was a well-intentioned shortcut that turned into a major security liability.

The "Before You Paste" Checklist

To keep your reputation and your clients safe, run through these four points:

Check the Version: Are you using the free/public version? If yes, treat it as a public forum. Using AI safely with client data generally requires a business or enterprise plan backed by a Data Processing Agreement (DPA) and contract terms that explicitly rule out using your data for training.

Read the NDA: Does your client contract permit sharing data with third-party processors? Many agreements predate generative AI, so you may already be in breach of contract the moment you paste.

Practice Data Minimization: If you need the AI's reasoning but not your client's details, strip out the specifics. Use "Client X" or "Company Alpha" instead of real names, and remove identifiers such as email addresses and account numbers.

Evaluate the Stakes: If this data were leaked, would it cause financial or reputational ruin? If the answer is yes, it doesn't belong in a cloud-based AI.
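For teams that paste text into AI tools regularly, part of the data minimization step can be automated. Below is a minimal Python sketch, assuming you maintain a per-client list of names to replace; the `ALIASES` mapping, the `minimize` function, and the regular expressions are hypothetical illustrations, not a Lumensafe tool or any vendor's API. Pattern-based redaction will miss things (nicknames, street addresses, context clues), so treat it as a first pass before a human check, not a guarantee.

```python
import re

# Hypothetical per-client mapping of real names to neutral placeholders.
# Maintain one of these per engagement; it is an assumption, not a standard.
ALIASES = {
    "Acme GmbH": "Company Alpha",
    "Jane Doe": "Client X",
}

# Rough email pattern: local part, "@", then one or more dot-separated labels.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# Runs of at least 6 characters that start and end with a digit and contain
# only digits or spaces in between (e.g. account, invoice or phone numbers).
LONG_NUMBER = re.compile(r"\d(?:[\d ]{4,}\d)")


def minimize(text: str, aliases: dict[str, str] = ALIASES) -> str:
    """Return a copy of text with known names, emails and long numbers masked."""
    # Replace longer names first so a long name is not split by a shorter alias.
    for real in sorted(aliases, key=len, reverse=True):
        alias = aliases[real]
        # A function replacement avoids backslash escapes in the alias text.
        text = re.compile(re.escape(real), re.IGNORECASE).sub(lambda _m: alias, text)
    text = EMAIL.sub("[EMAIL]", text)
    text = LONG_NUMBER.sub("[NUMBER]", text)
    return text


if __name__ == "__main__":
    note = "Jane Doe (jane.doe@acme.example) from Acme GmbH disputes invoice 20230417."
    print(minimize(note))
    # → Client X ([EMAIL]) from Company Alpha disputes invoice [NUMBER].
```

The design choice here is deliberately conservative: known names are swapped for stable placeholders (so the AI's answer still reads coherently and you can map it back), while generic identifiers are masked outright.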

The Lumensafe Perspective: Hardening the Human Layer

At Lumensafe, we don’t believe in banning AI; we believe in building the Human Firewall. Security isn't just a software update; it’s your team's digital intuition.

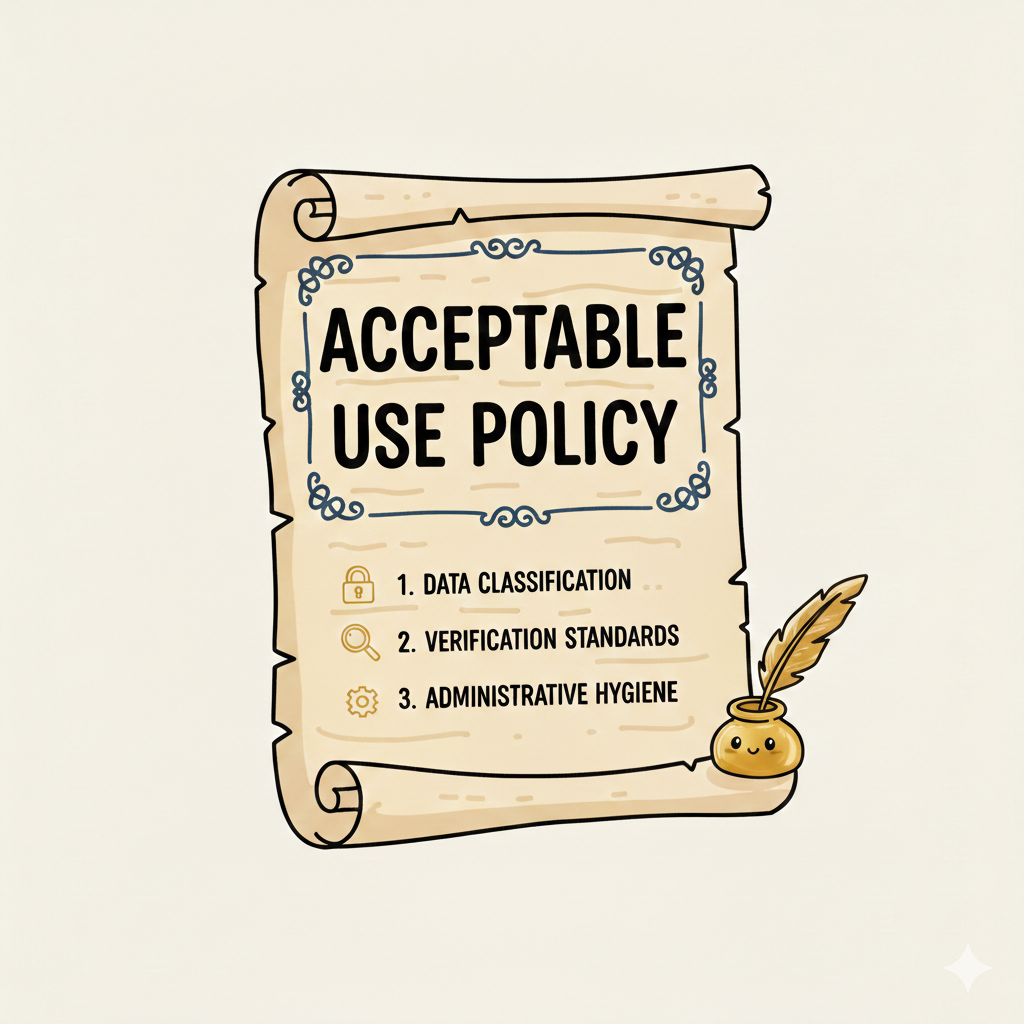

To move from risk to reliability, we recommend a three-pillar Acceptable Use Policy:

Data Classification: Define what is "Safe" (e.g., published marketing copy), "Internal" (approved, secured tools only), and "Strictly Prohibited" (personally identifiable information, or PII, and financial records).

Verification Standards: Mandate a "Human-in-the-Loop" for any high-stakes output. AI provides the momentum; you provide the final authority.

Administrative Hygiene: Use only vetted, company-linked accounts, and make sure AI subscriptions are registered to your business under the same legal and tax identifiers as your other vendor contracts, such as your Wirtschafts-Identifikationsnummer.

AI is a high-speed drafting partner, but your oversight ensures you work safely without breaking trust.

How can we help you build an even more solid security culture? Let’s chat!